Evaluation

Overview

Understanding evaluation sets and how to use them in Extend

Evaluation sets allow you to reliably and continuously test the accuracy and performance of your AI document processors in Extend.

By creating sets of representative document examples with validated outputs, you can verify that your extraction and classification configs are working as intended, identify areas for improvement, and quickly check whether a change has actually improved the accuracy of your processors.
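The core of an evaluation is comparing a processor's output against the validated output for each example. The sketch below shows one way that comparison could work, and how two configs can be compared on the same set. It is not Extend's API. The `EvalExample` type, the `field_accuracy` and `set_accuracy` functions, and the exact-match rule for field values are hypothetical and chosen only for illustration. Extend's own scoring may work differently.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Any


@dataclass
class EvalExample:
    """One document in a hypothetical evaluation set (not an Extend type)."""
    document_id: str
    expected: dict[str, Any]  # validated output for this document


def field_accuracy(expected: dict[str, Any], actual: dict[str, Any]) -> float:
    """Fraction of expected fields whose extracted value matches exactly (assumed rule)."""
    if not expected:
        return 1.0
    matches = sum(1 for key, value in expected.items() if actual.get(key) == value)
    return matches / len(expected)


def set_accuracy(examples: list[EvalExample], outputs: dict[str, dict[str, Any]]) -> float:
    """Mean per-document field accuracy across the whole evaluation set."""
    scores = [field_accuracy(ex.expected, outputs.get(ex.document_id, {})) for ex in examples]
    return sum(scores) / len(scores) if scores else 0.0


eval_set = [
    EvalExample("inv-001", {"invoice_number": "A-17", "total": 120.50}),
    EvalExample("inv-002", {"invoice_number": "B-03", "total": 88.00}),
]

# Outputs from two hypothetical processor configs run on the same documents.
baseline = {
    "inv-001": {"invoice_number": "A-17", "total": 120.50},
    "inv-002": {"invoice_number": "B-3", "total": 88.00},
}
candidate = {
    "inv-001": {"invoice_number": "A-17", "total": 120.50},
    "inv-002": {"invoice_number": "B-03", "total": 88.00},
}

print(set_accuracy(eval_set, baseline))   # 0.75
print(set_accuracy(eval_set, candidate))  # 1.0
```

Because both configs are scored against the same validated outputs, the difference in their scores reflects the config change rather than a change in the test data.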

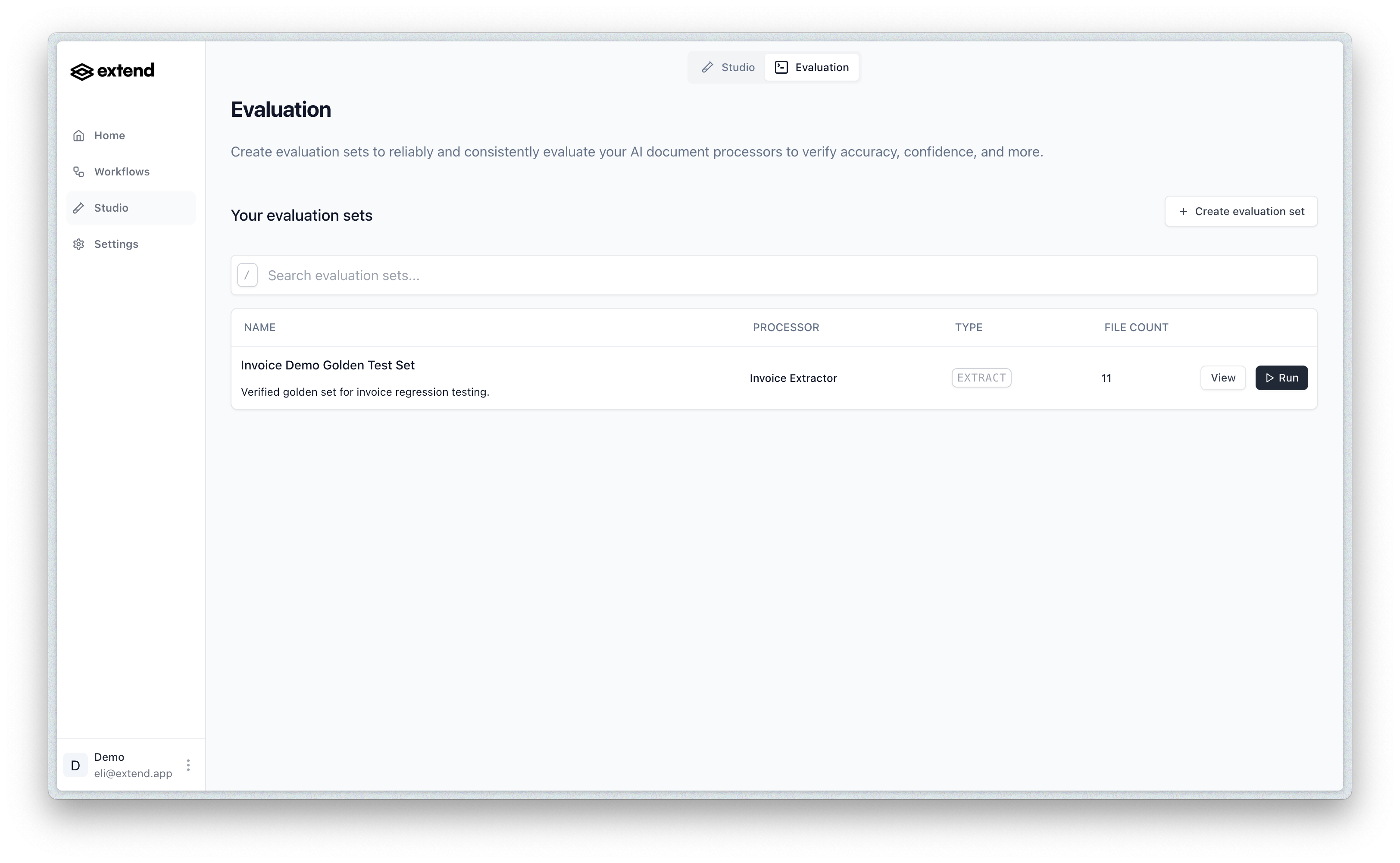

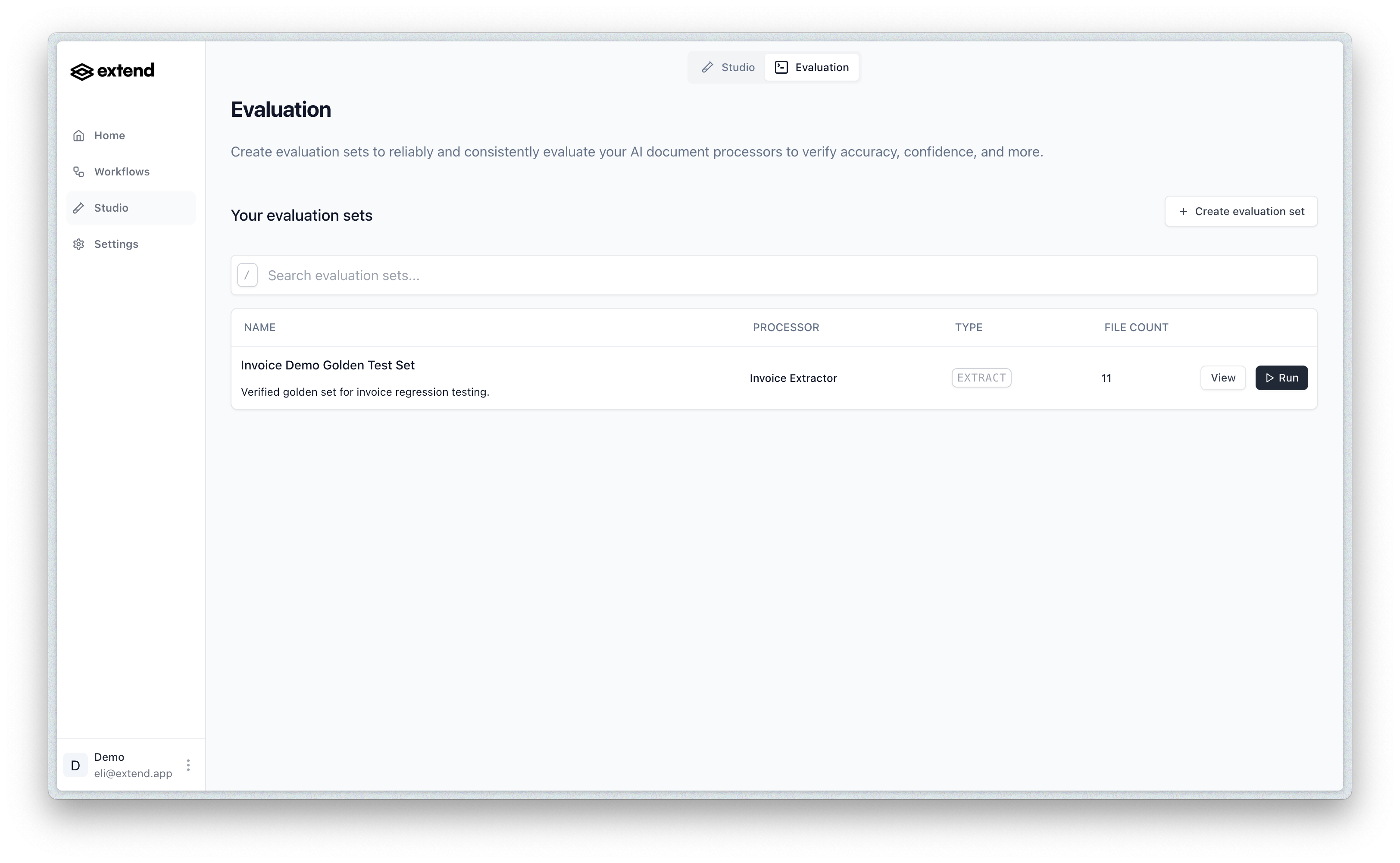

You can view and manage your evaluation sets from the Evaluation page in the Studio section of Extend. Here you’ll find a list of your existing evaluation sets along with options to create new sets and run evaluations.

Evaluation plays a key role in building accurate, reliable AI document processors for your use cases in Extend:

- Create evaluation sets containing examples that represent the range of documents your processor needs to handle

- Run evaluations on your sets to test processor accuracy and performance

- Review evaluation results to verify that processor outputs match the expected outputs, and to identify common errors and areas for improvement

- Iterate on processor configuration and training data to improve accuracy based on evaluation insights
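The review step is where common errors surface. As a hypothetical illustration (again, not Extend's API), counting mismatches per field across an evaluation run shows which fields fail most often, and therefore which parts of the config are the best candidates for the next iteration. The function name `field_error_counts` and the input shapes are assumptions made for this sketch.

```python
from __future__ import annotations

from collections import Counter
from typing import Any


def field_error_counts(
    expected_by_doc: dict[str, dict[str, Any]],
    actual_by_doc: dict[str, dict[str, Any]],
) -> Counter:
    """Count, per field name, how many documents had a mismatched value (hypothetical helper)."""
    errors: Counter = Counter()
    for doc_id, expected in expected_by_doc.items():
        actual = actual_by_doc.get(doc_id, {})
        for field, value in expected.items():
            # A missing field counts as an error, the same as a wrong value.
            if actual.get(field) != value:
                errors[field] += 1
    return errors


expected = {
    "doc-1": {"vendor": "Acme", "date": "2024-01-05", "total": 10.0},
    "doc-2": {"vendor": "Globex", "date": "2024-02-11", "total": 25.0},
    "doc-3": {"vendor": "Initech", "date": "2024-03-02", "total": 7.5},
}
actual = {
    "doc-1": {"vendor": "Acme", "date": "01/05/2024", "total": 10.0},
    "doc-2": {"vendor": "Globex", "date": "02/11/2024", "total": 25.0},
    "doc-3": {"vendor": "Initech Inc", "date": "2024-03-02", "total": 7.5},
}

# Most frequent failures first.
for field, count in field_error_counts(expected, actual).most_common():
    print(f"{field}: {count} of {len(expected)} documents mismatched")
```

In this made-up run, `date` fails on two of three documents because the output uses a different date format, which suggests adjusting the field's formatting instructions rather than the extraction as a whole.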