Running evaluation sets

How to run evaluation sets in Extend

Running well-maintained, up-to-date evaluation sets is one of the best ways to confirm that your AI document processors perform as expected and to identify areas for improvement as you iterate.

Follow this guide to learn more about how to run and maintain evaluation sets in Extend.

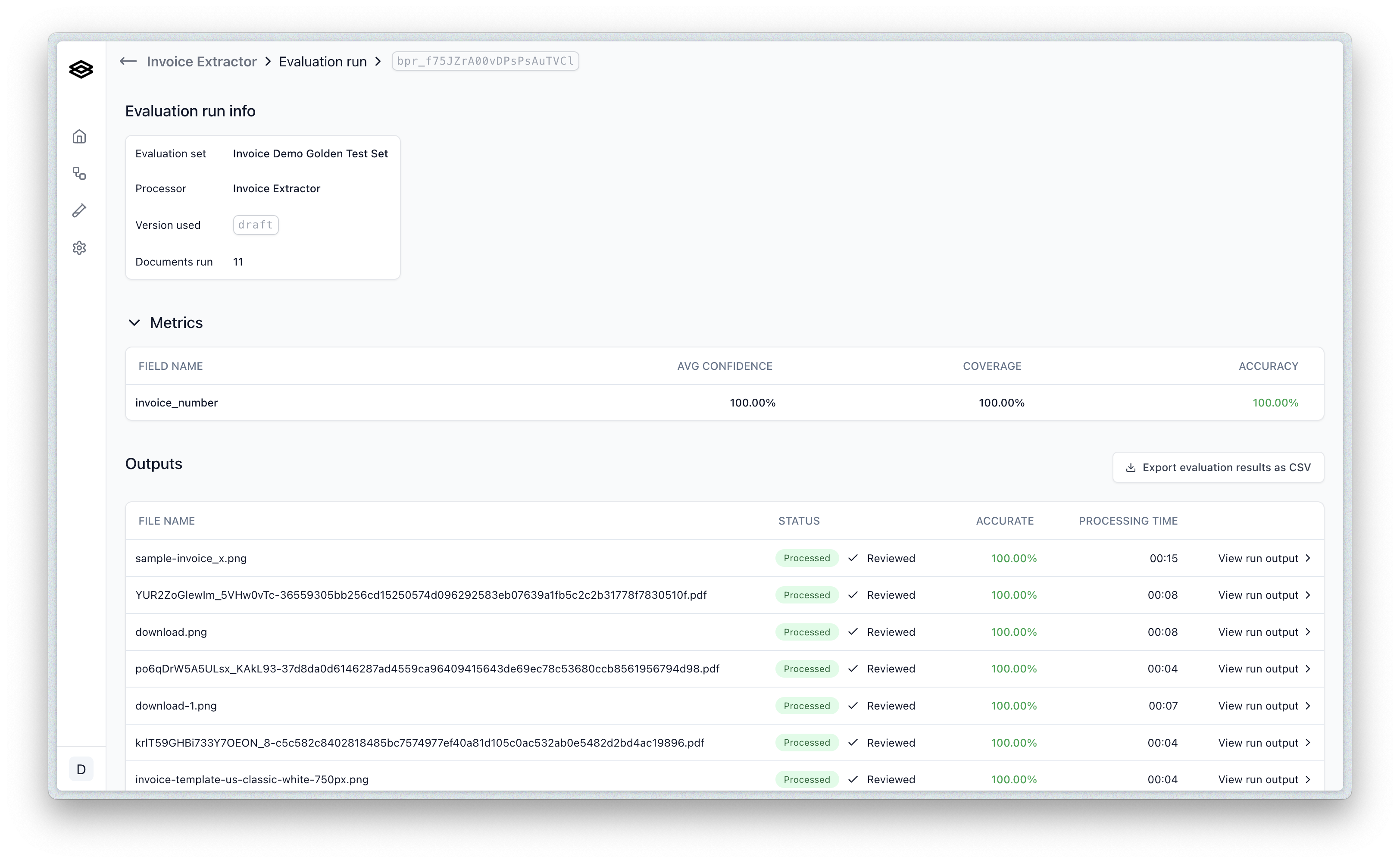

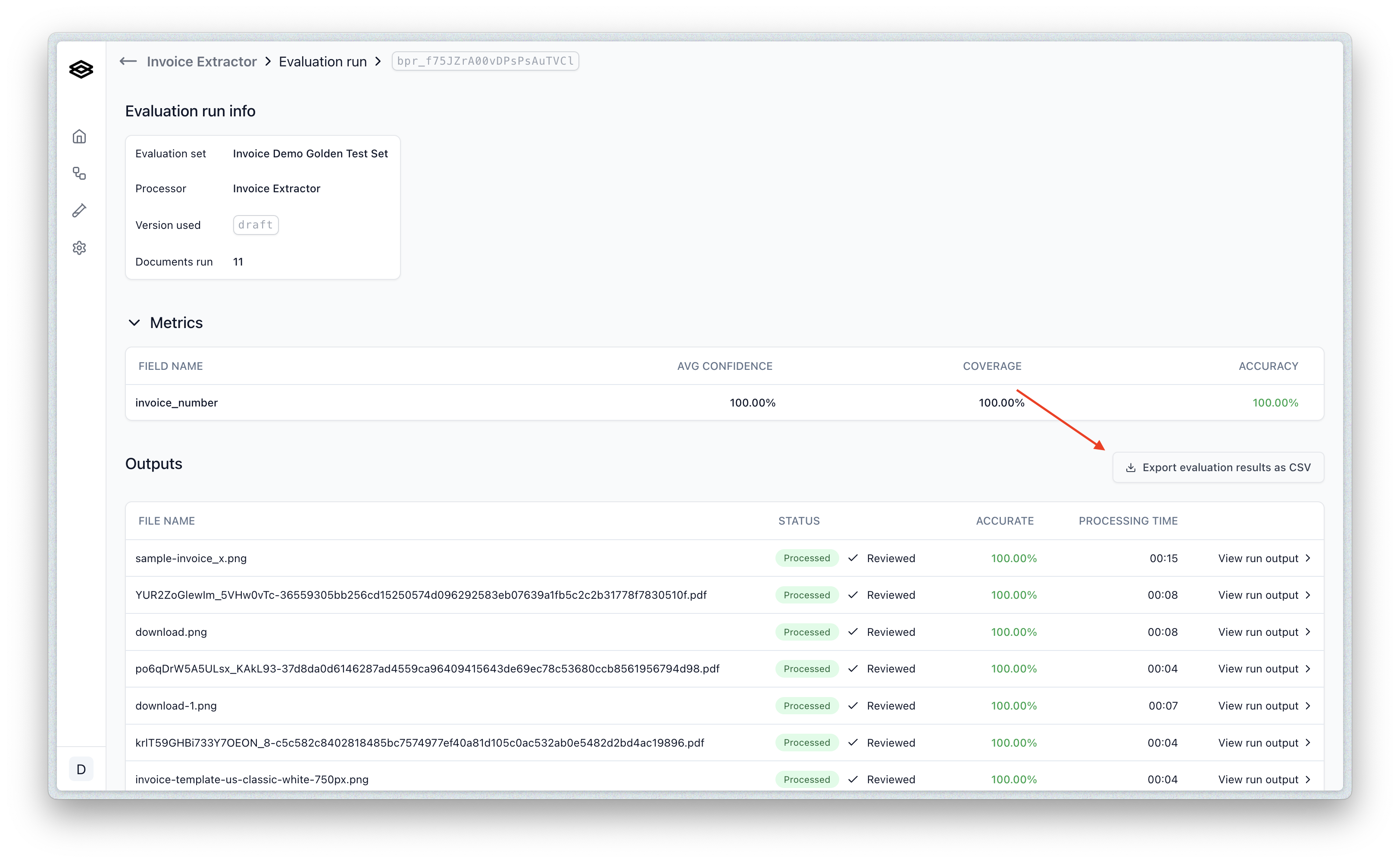

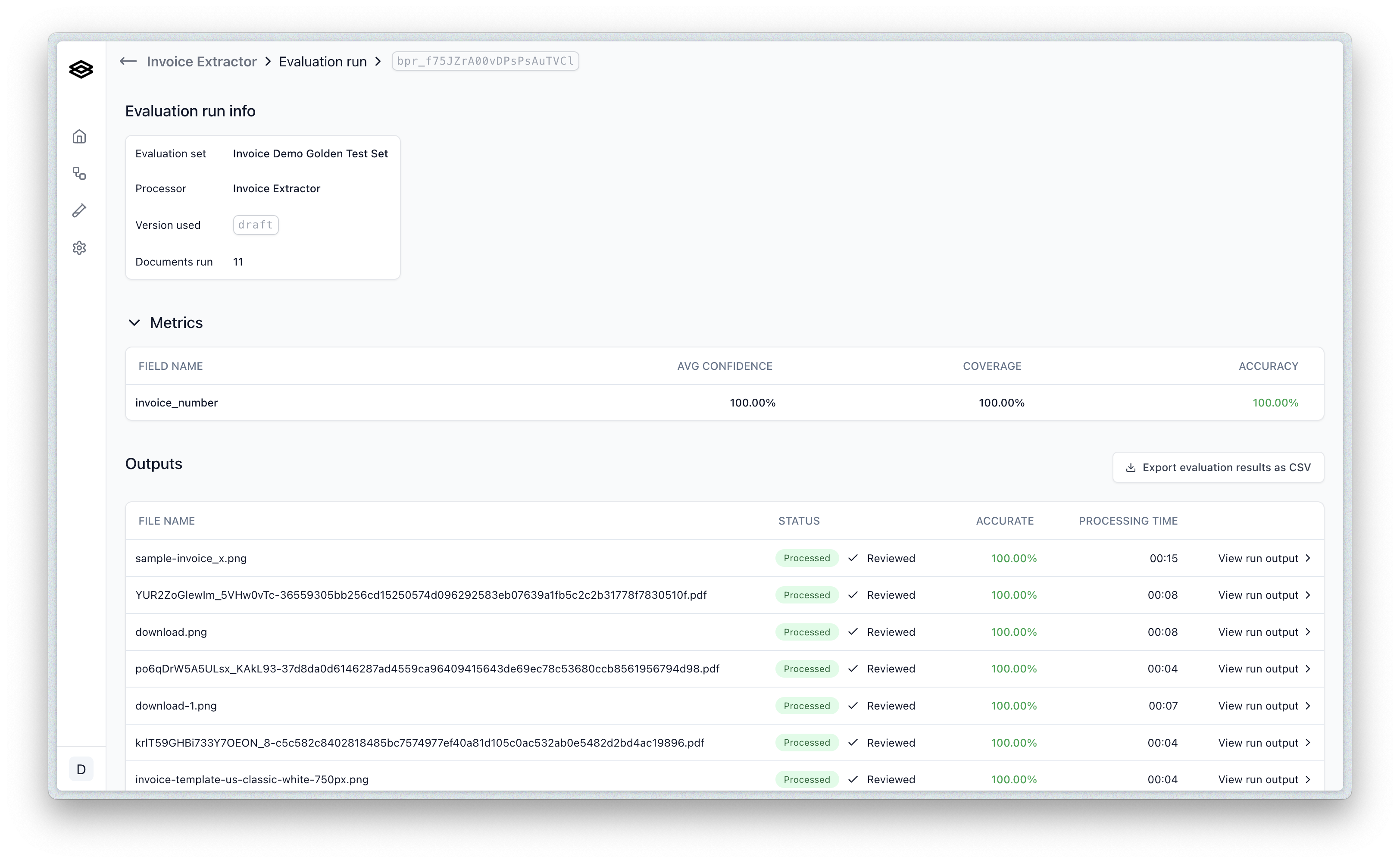

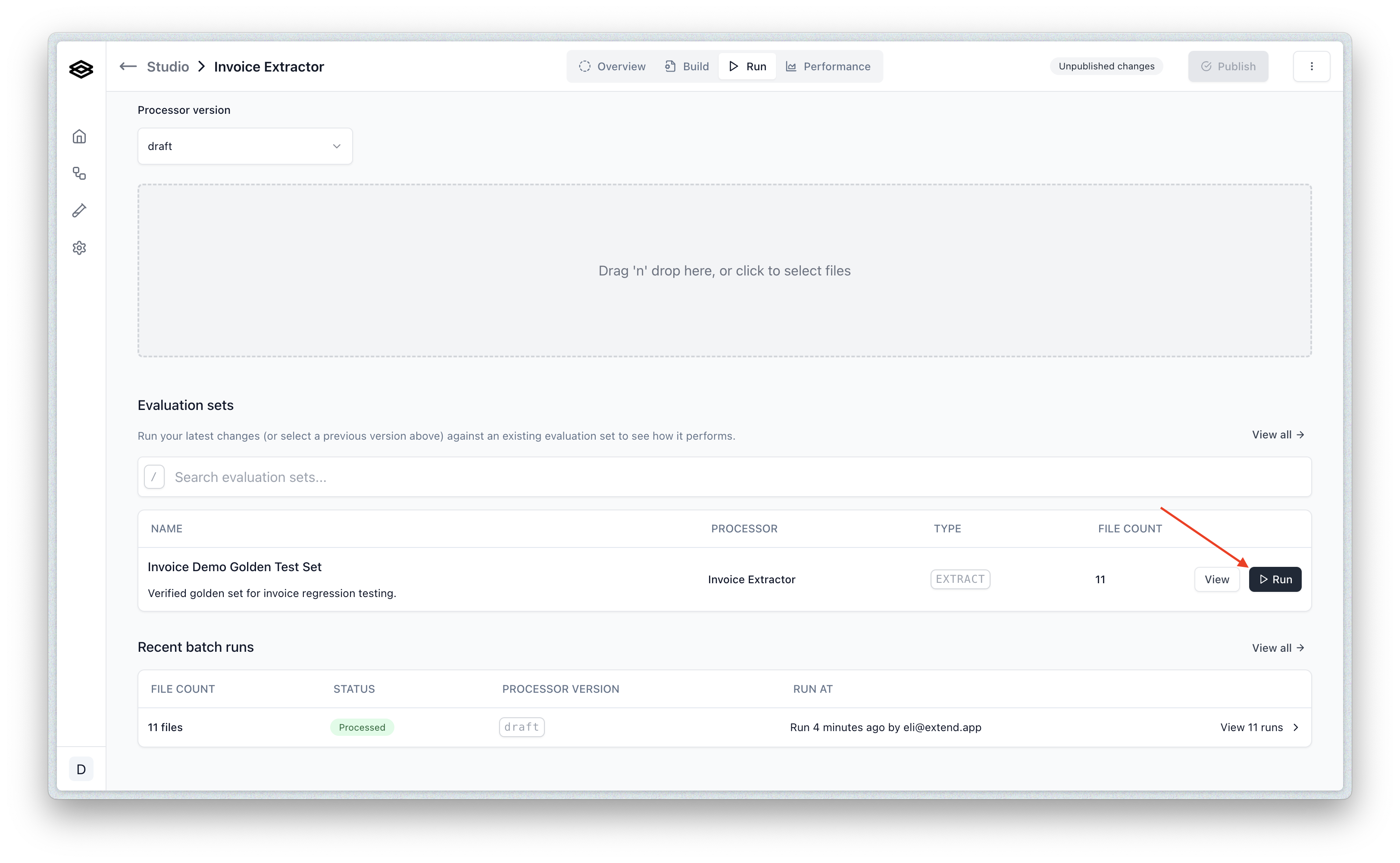

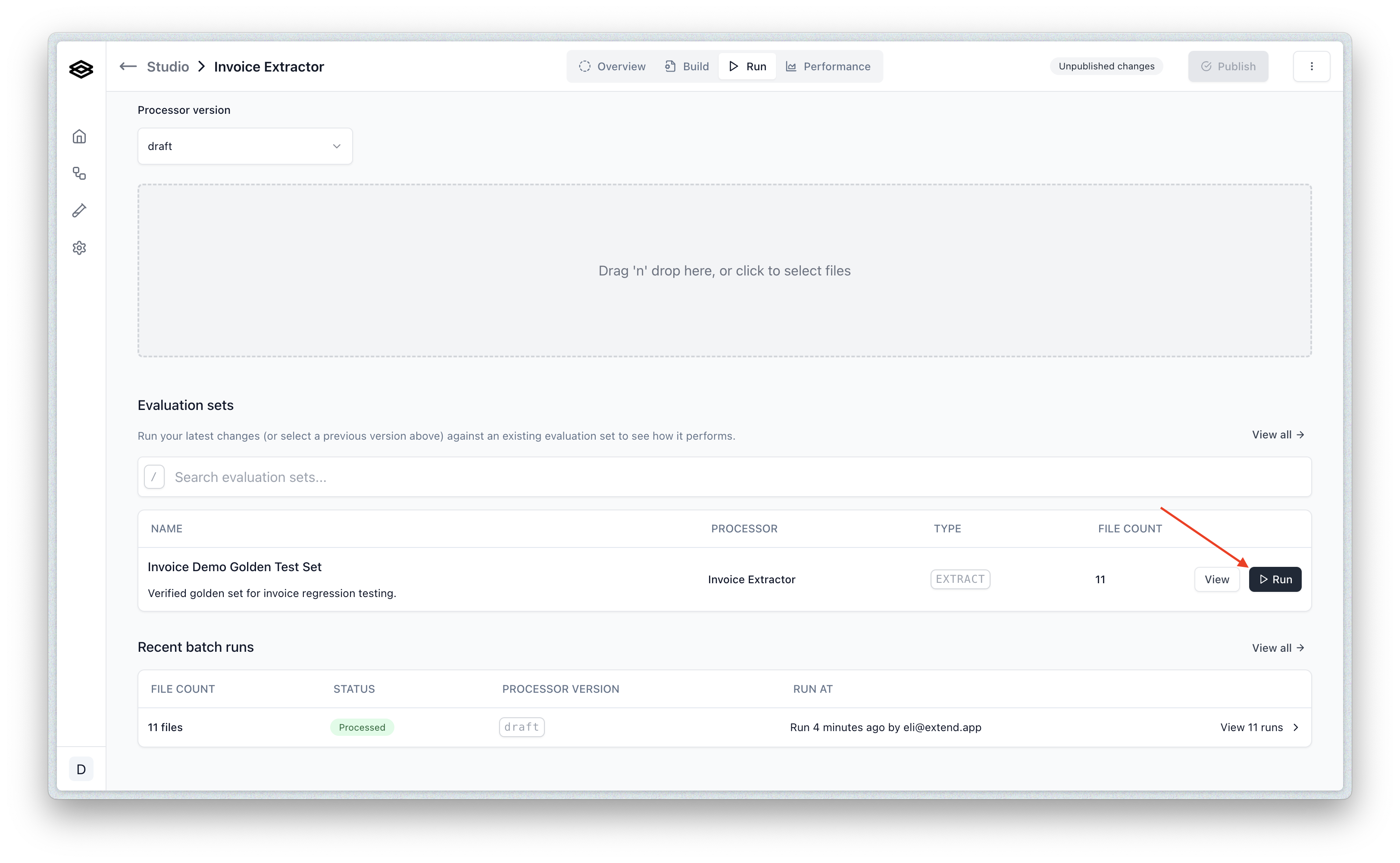

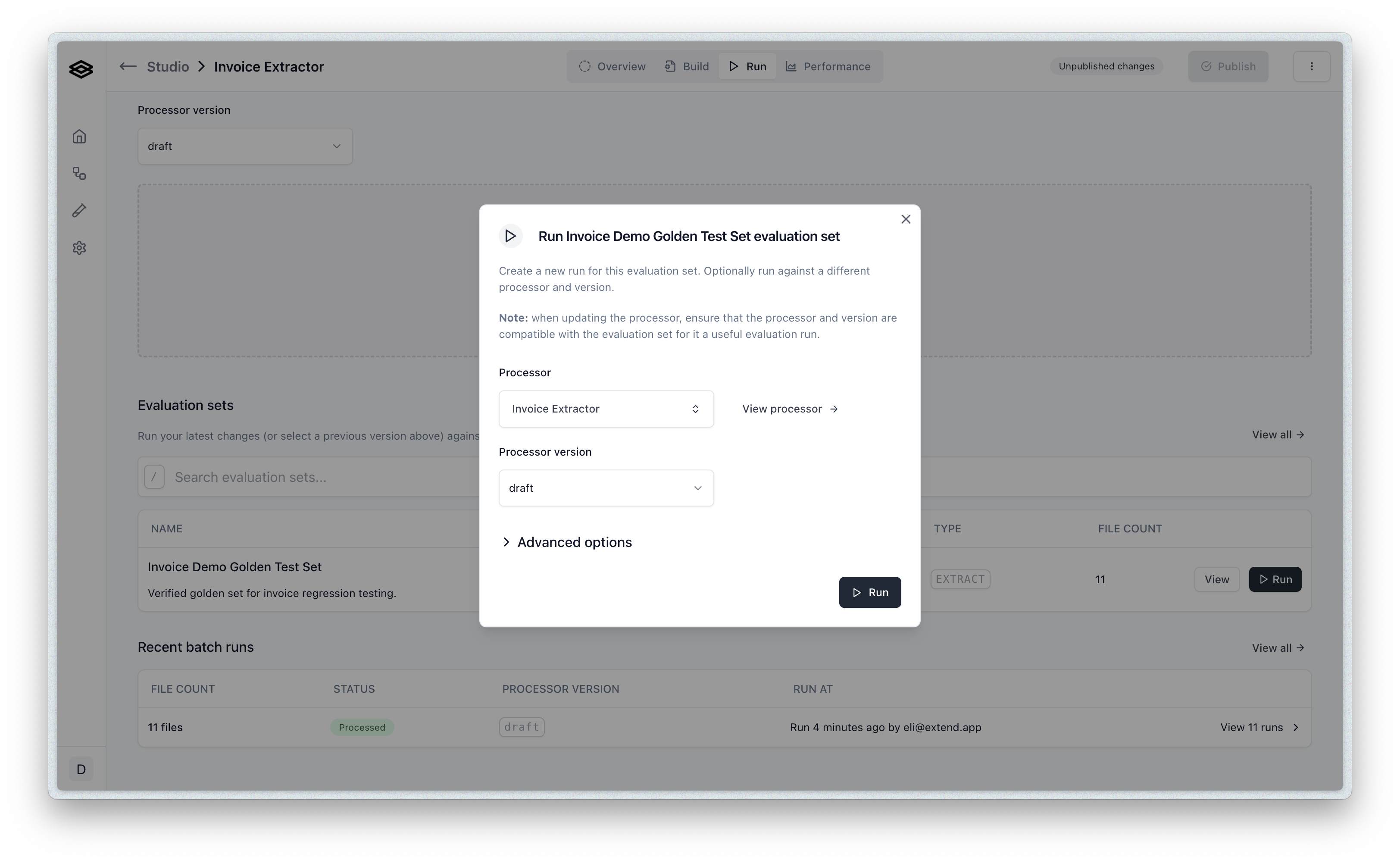

- Navigate to the runner page for the processor that has the evaluation set tied to it.

- Click the “Run” button on the evaluation set you want to run. This will open the run dialog.

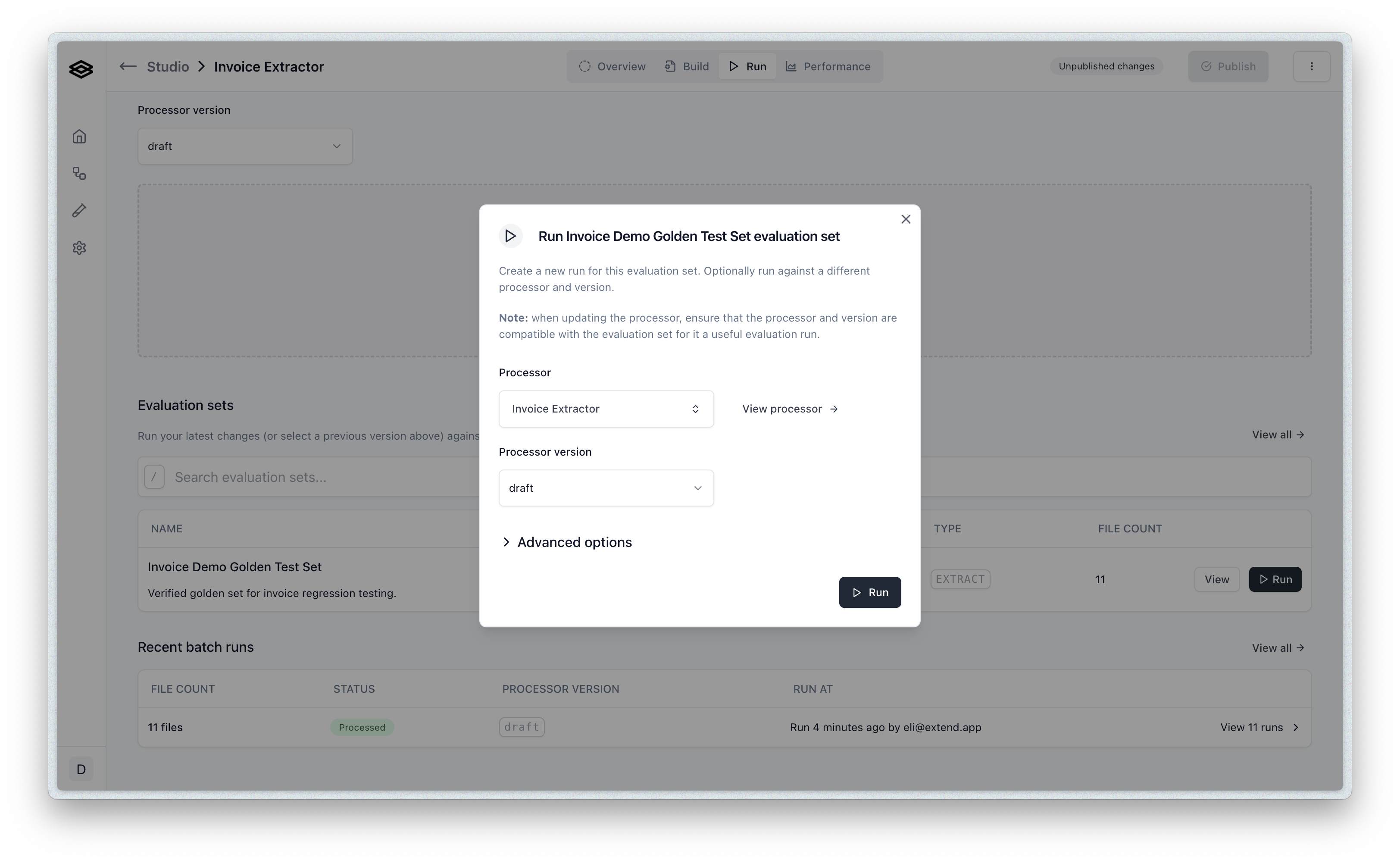

- The default options are the processor and version you selected in the run UI. You can, however, change the version or the processor itself before starting the run.

- Click “Run Evaluation” to start the evaluation. You will be redirected to the evaluation run page where you can view the progress of the evaluation.

This is a very simple extraction processor for demo purposes, but it shows the layout of the page: a metrics summary at the top and, below it, a list of the documents that were run along with their results.

Each processor type has its own metrics view. For instance, a classification processor shows a per-type accuracy distribution and a confusion-matrix-like view.
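To make the classification metrics concrete, here is a minimal sketch of how a per-type accuracy distribution and a confusion matrix can be derived from pairs of expected and predicted document types. It is not Extend's implementation; the function name `classification_metrics` and the sample labels are assumptions made for illustration only.

```python
from collections import Counter, defaultdict


def classification_metrics(pairs):
    """Summarise (expected, actual) label pairs from an evaluation run."""
    # confusion[expected][actual] -> number of documents
    confusion = defaultdict(Counter)
    for expected, actual in pairs:
        confusion[expected][actual] += 1

    # Share of documents of each expected type that were predicted correctly.
    per_type_accuracy = {
        expected: row[expected] / sum(row.values())
        for expected, row in confusion.items()
    }

    total = sum(sum(row.values()) for row in confusion.values())
    correct = sum(row[label] for label, row in confusion.items())
    overall = correct / total if total else 0.0
    return overall, per_type_accuracy, {k: dict(v) for k, v in confusion.items()}


# Hypothetical results: (expected type, predicted type) for each document.
results = [
    ("invoice", "invoice"),
    ("invoice", "receipt"),
    ("receipt", "receipt"),
    ("contract", "contract"),
]
overall, per_type, confusion = classification_metrics(results)
```

Reading the confusion matrix row by row shows not only which types score poorly but also which type they are being mistaken for, which is usually the more actionable signal.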

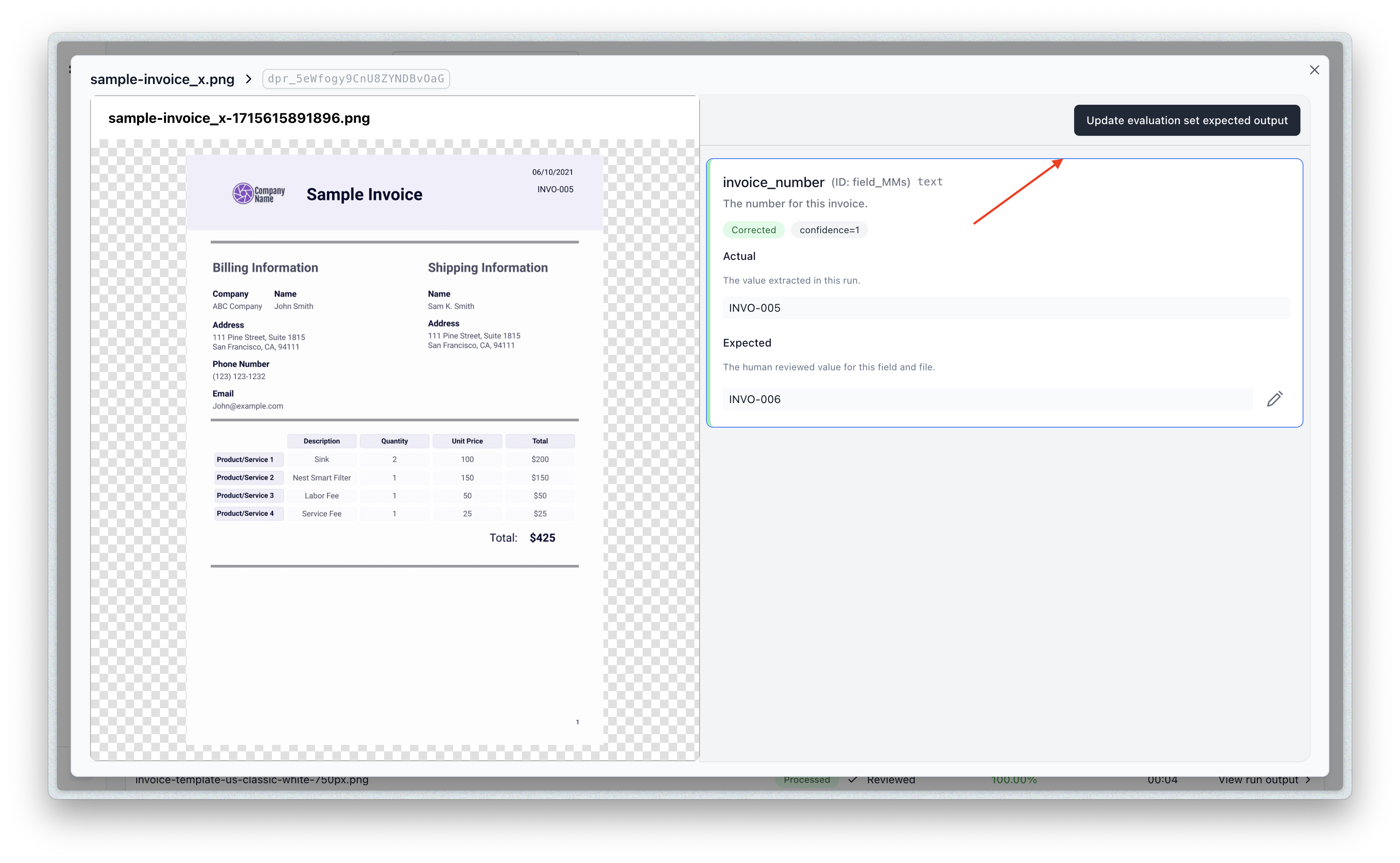

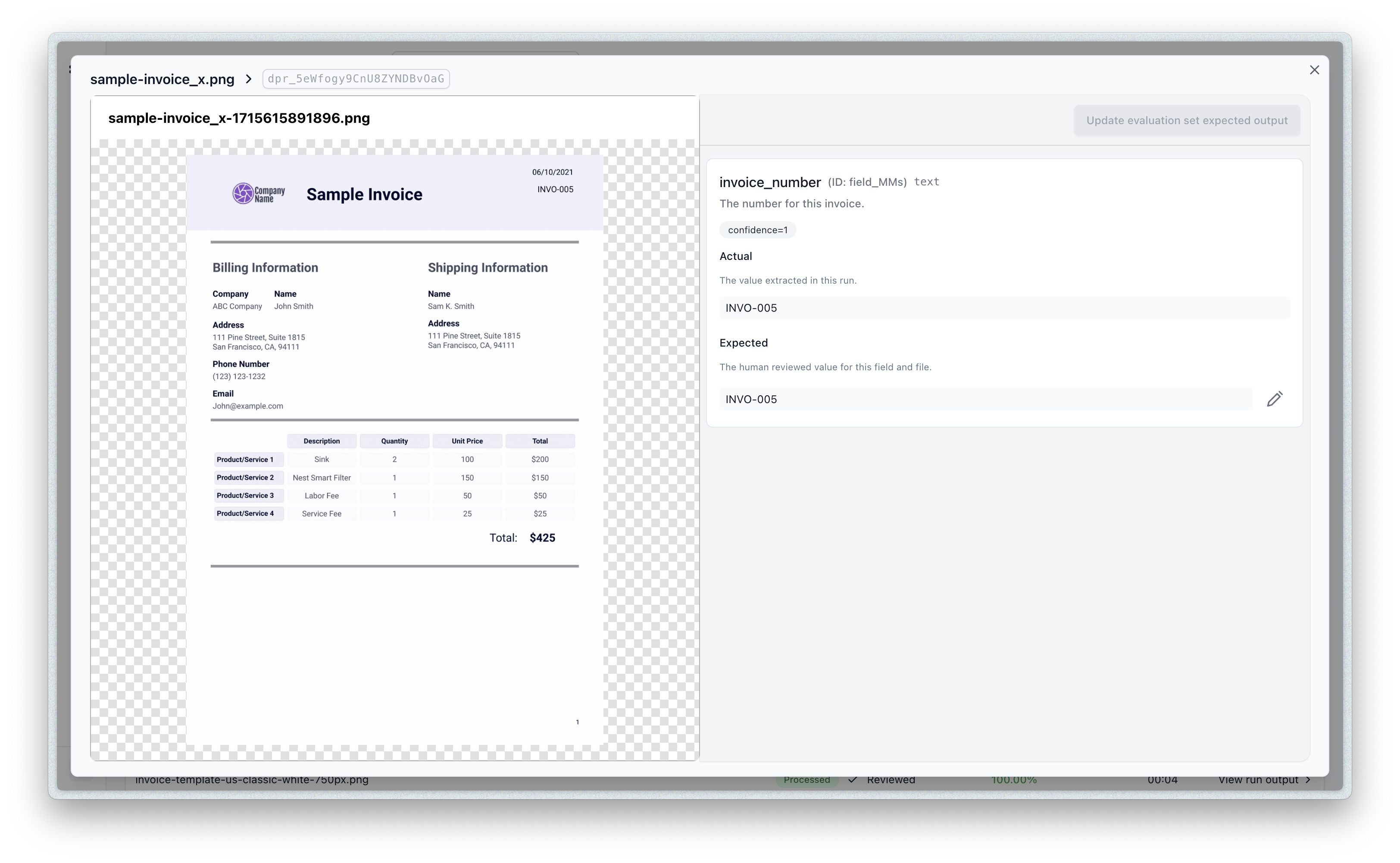

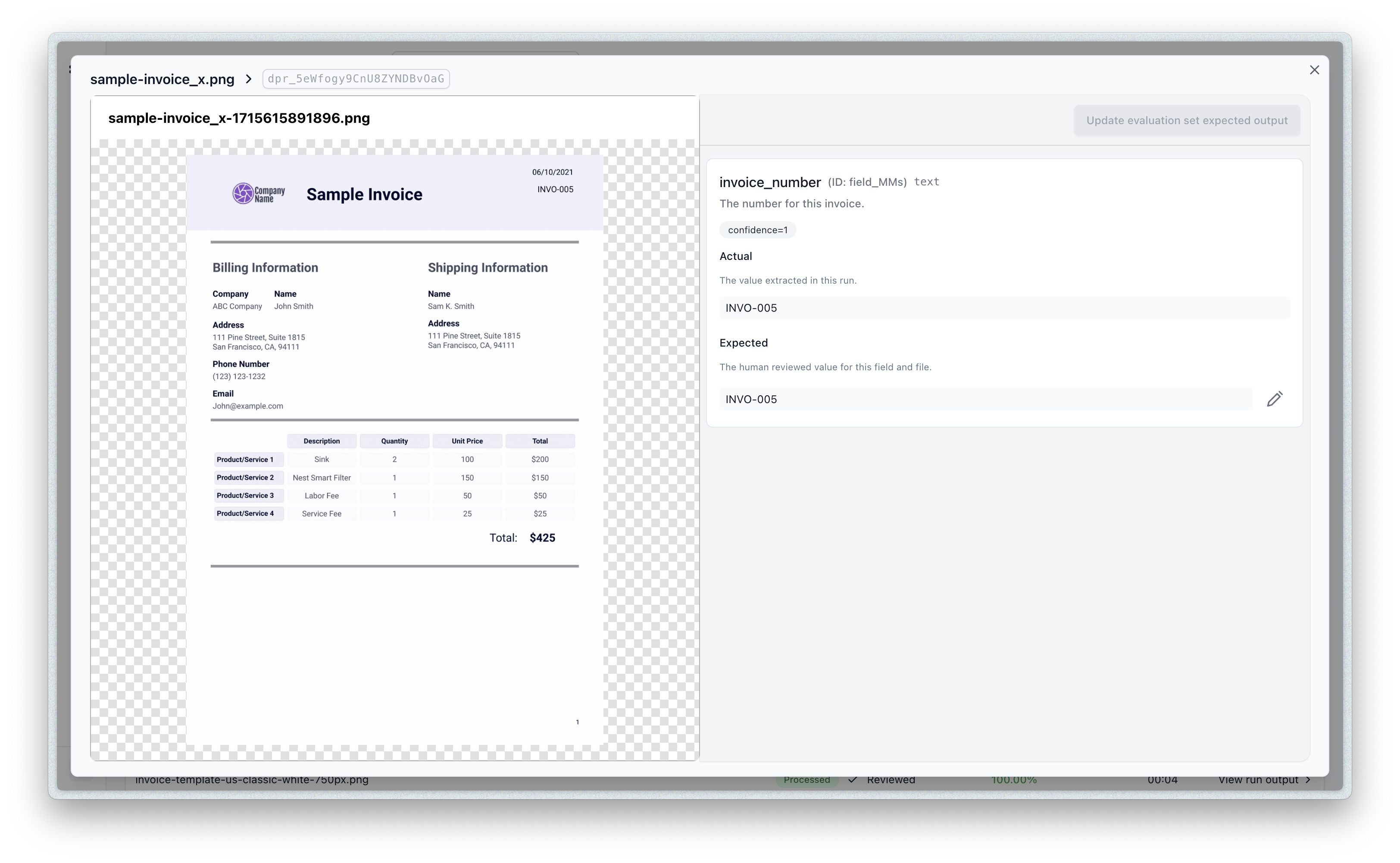

- You can click on a given row to view the details of actual vs. expected outputs for that document.

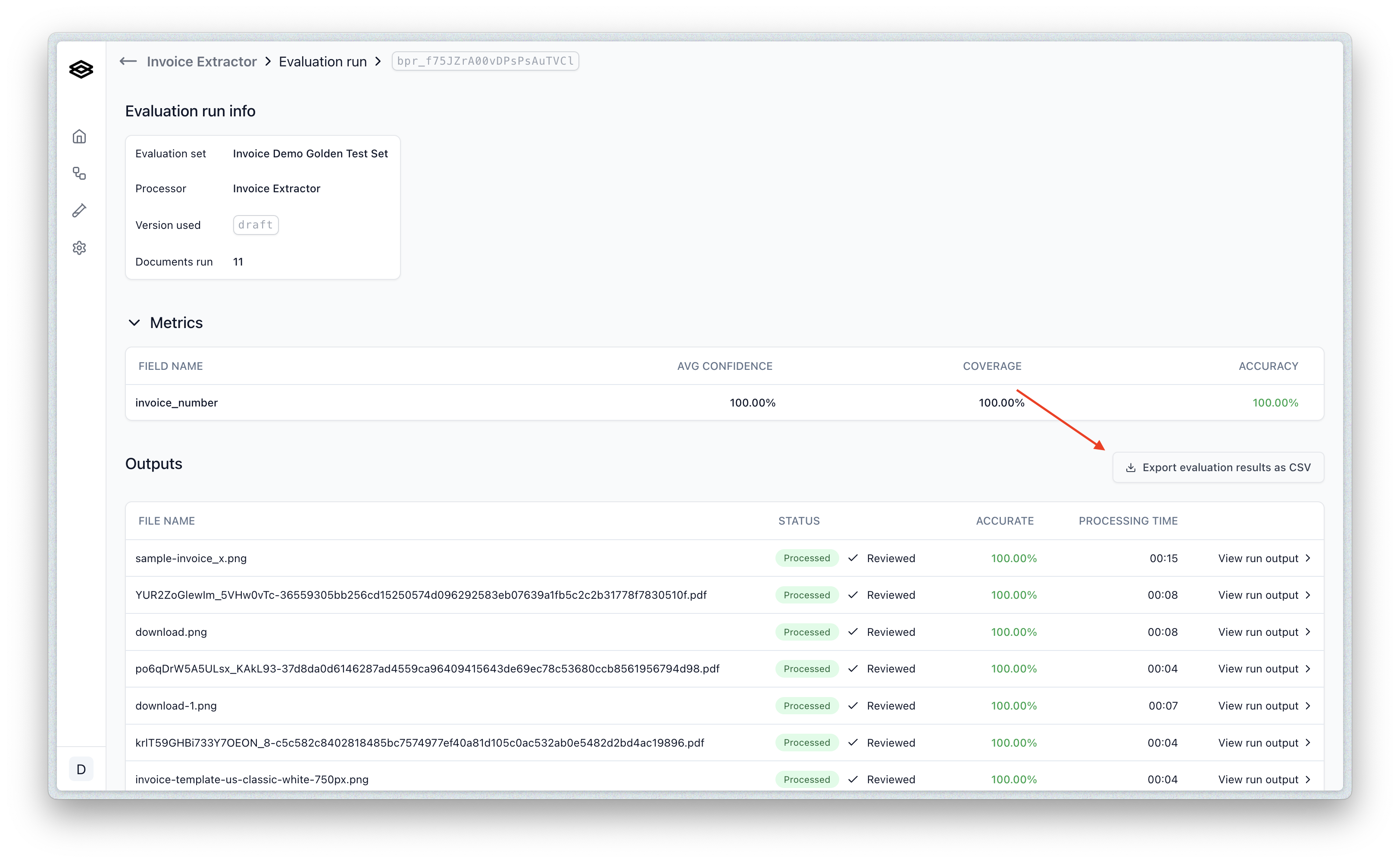
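The actual vs. expected comparison for an extraction document boils down to a field-by-field diff. The sketch below illustrates the idea under simple assumptions: outputs are flat dictionaries and values match only when exactly equal. The field names and the helper `diff_fields` are hypothetical and do not reflect Extend's internal comparison logic.

```python
def diff_fields(expected, actual):
    """Return {field: (expected_value, actual_value)} for every mismatching field."""
    mismatches = {}
    # Include fields present on either side so missing and extra fields show up.
    for field in sorted(set(expected) | set(actual)):
        exp_value = expected.get(field)
        act_value = actual.get(field)
        if exp_value != act_value:
            mismatches[field] = (exp_value, act_value)
    return mismatches


# Hypothetical outputs for a single document in the evaluation set.
expected = {"invoice_number": "INV-001", "total": "120.00", "currency": "USD"}
actual = {"invoice_number": "INV-001", "total": "102.00", "currency": "USD"}

mismatches = diff_fields(expected, actual)
field_accuracy = 1 - len(mismatches) / len(set(expected) | set(actual))
```

Here only `total` differs, so two of the three fields match.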

- You can also download the results of the evaluation as a CSV file by clicking the Export button:

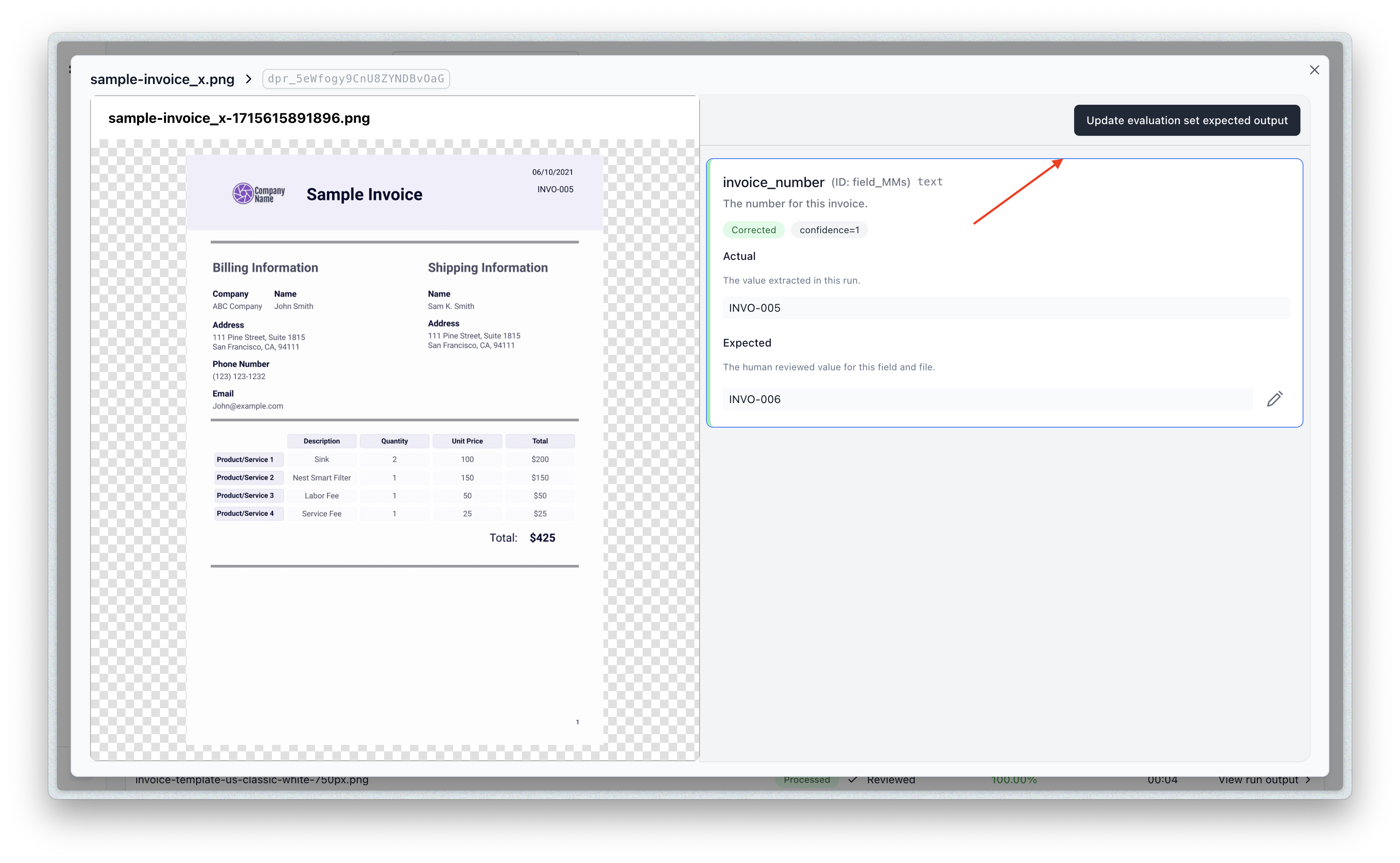
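Once exported, the CSV can be analysed with ordinary tooling. The following sketch lists the rows where the actual value differs from the expected one. The column names (`document`, `field`, `expected`, `actual`) and the sample data are assumptions; check the header row of your own export and adjust accordingly.

```python
import csv
import io

# Hypothetical export contents; the real file's columns may differ.
sample_export = """document,field,expected,actual
a.pdf,total,120.00,120.00
a.pdf,currency,USD,USD
b.pdf,total,75.50,57.50
"""


def failing_rows(csv_file):
    """Return the rows whose actual value does not match the expected value."""
    reader = csv.DictReader(csv_file)
    return [row for row in reader if row["expected"] != row["actual"]]


failures = failing_rows(io.StringIO(sample_export))
for row in failures:
    print(f'{row["document"]}: {row["field"]} expected {row["expected"]}, got {row["actual"]}')
```

To run this against a downloaded file, pass an open file handle instead of the in-memory sample, for example `with open("export.csv", newline="") as f: failing_rows(f)`, where `export.csv` is a placeholder file name.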

- You can also update the evaluation item so that the results of this run become its new expected outputs by clicking the “Update” button: